Development of a distributed graph backend for a logistics system

Overview

In today's world Logistics is considered as the backbone of an economy. Being the fastest evolving industry the Indian logistics sector is currently growing at a rate of 10.5% CAGR since 2017 and estimated to be of $225 Bn by the end of 2022.

Hence, there is an upsurge in demand of efficient algorithms for packing & routing in the several logistics systems running at a national, state, local & hyper-local level

Along with the need of such ground-breaking algorithms, that the Logistics Lab at IIT Madras is working upon, also arises the need to create a software layer that interacts with the users & the developers of the application in the most seamless manner possible.

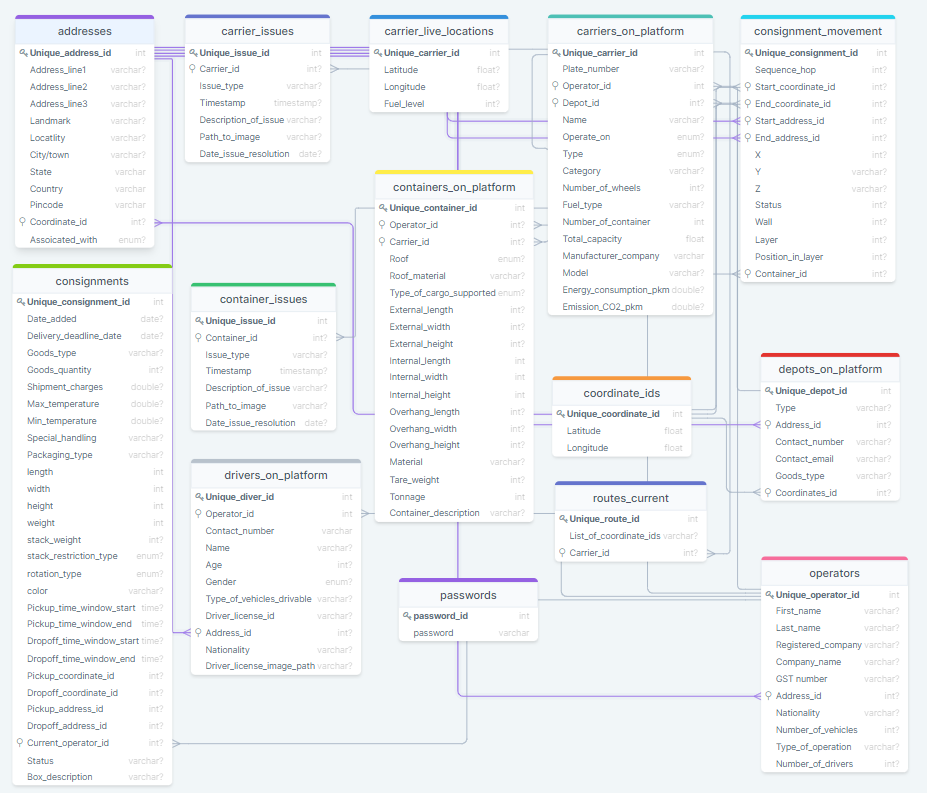

The logistics sector is quite convoluted with multiple stakeholders involved in it interconnecting with each other on multiple levels. Hence, the approach was picked to view each entity in this system as a node in a graph to create a software layer that can adequately study the relations between them.

Goals

The project was divided into 2 main goals

- Develop a framework for modeling various entities in the graph-based network proposed, & simulate the interactions between them with generated data that abides certain constraints observed in real world scenarios.

- Set up a distributed system infrastructure that is redundant, scalable, cost-optimized & highly performant.

Apart from these there were several other objectives such as forming & training a team, setting up development environment & CI/CD pipelines & documentation of the entire process that I undertook as part of this project.

A High Level Design Overview

A high level overview of the system would be as follows :

- Databases -

- 1 Master database that stores all the data for the graph entities in a structured format.

- 1 Database that stores the information coming from Maps API for hitting the paid API endpoints only when new data is asked for

- 1 key-value database that handles authentication, authorization & user session management.

- Business logic server - The main server that :

- Sets up the graph based connectivity across various tables in our database & exposes a set of endpoints to granularly understand the relations between these data points & perform calculations & manipulations over them,

- A server for managing authentication & authorization with inbuilt role-based access control set up for finer management of roles

- Communicates with other microservices in our architecture

- Miscellaneous services used :

- API documentation - For easy understanding of the available endpoints & mock request-responses to integrate easily

- Containerization - For extracting server logic into reusable software chunks so that scaling the system is as easy as spinning up another container running similar code.

- Cloud provider - For hosting our servers & website in the initial days of building the MVP.

- UI Prototyping - To mock each design & debate feature utility before developing the same.

- Remote Version Control System - To collaborate on developing different features with different developers in parallel,

- CI/CD - For automation of repititive tasks, via usage of webhooks over events on code repositories. This also involved setup of cron jobs over remote servers.

Below img displays a systems diagram for entire software stack developed over the project course

Tech Stack

- React UI library used for developing our frontend - a responsive web-application

- React Query - A client for connecting the React state changes with the API endpoints written in GraphQL. Basically it’s the intermediary to interpret UI component functions performed and pass them to the APIs for resolving.

- TypegraphQL APIs - APIs written in GraphQL (with added type definitions) :

- GraphQL is self documenting - Helps save developer effort in documenting each API endpoint

- Helps reduce latency and load by selective querying

- Scales very well with data points

- Scale really well with wide column data entries

- TypeORM - A simple Object Relational Mapping (ORM) to convert the CRUD requests from our API into the format that the connected database can understand, in this case, it means converting the queries (functions to read data) and mutations (functions to manipulate data) of our GraphQL endpoints into SQL queries that our Postgres database can interpret

- Postgres - An open-source simple SQL based database with a fast, powerful engine.

- Redis - Redis is an open source (BSD licensed), in-memory data structure store used as an auth database here owing to its high speed.

- Docker - The backbone of the entire CI/CD pipeline that makes setting up the entire architecture very seamless with fast software updates & production deployment rollouts

- GitHub Actions - For CI/CD with docker image tagging & publishing on feature releases

- Docker-compose - The Infrastructure as Code solution that makes the entire architecture easily understandable & scalable to infinite extent

- MongoDB - A noSQL database used here as the spatial database holding all the variable information that is received from Google Maps API & storing it for fast, easy & scalable access.

- Express - A node JS HTTP framework used here for creating APIs for authentication, session management & creating spatial API endpoints.

- Language used for development is TypeScript

This convoluted, yet beautifully intricate tech stack was achieved as a result of multiple iterations over the problem statement & each blocker we had run into while we were developing the same.

Through this report, I will be going over the key software engineering decisions taken over 4 major areas encompassing the architecture of this entire pipeline :

PART 1 : API structure of stakeholder entities with TypeGraphQL, TypeORM

A : Sample CRUD Endpoints

-

In this section, we discuss how the below portrayed interactions between various stakeholders of an atomic Logistics System network was put on a graph based network, & the engineering benefits associated with it

-

Database connections are made through environment configuration variables that differ for development & production. In this section, the main databases we’re connecting to is :

Postgres - For storing structured, well-defined that each entity constitutes of

-

The documentation for the entire backend can be found in a Postman collection with multiplie environment configuration & mock request/response setups that I have done while I was testing each API endpoint

-

We start of with a sample code of an entity “Coordinates" where, just by defining a simple class in TypeScript, we are able to actually create a table called

coordinatesthat has the columns as defined as properties in the class// src/entity/Coordinate.ts import { Entity, BaseEntity, PrimaryColumn, Column } from "typeorm"; import { ObjectType, Field, ID, Float } from "type-graphql"; @Entity("coordinates") @ObjectType() export class Coordinates extends BaseEntity { @Field(() => ID) @PrimaryColumn() readonly id:string; @Field(() => Float) @Column({ type: "float" }) latitude: number; @Field(() => Float) @Column({ type: "float" }) longitude:number; } -

We now write an API resolver over this model/entity/schema that helps us query this specific table with standard Create, Read, Update, Delete (CRUD) features + any miscellaneous functionalities that we wish to add

-

Witnessing a repeated need for the same CRUD functionalities required for each of the 15 stakeholder entities, I created an

EntityBaseResolverfunction that simply takes in the details of the entity & returns aBaseResolverclass with CRUD endpoints enabled over it.

TheCoordinatesResolvernow simply extends thisEntityBaseResolverand if required, provides additional features other than CRUD over it -

Here is the code for the

EntityBaseResolverfile. I have also added Authentication & Role Based Access Control over each endpoints inside the@UseMiddlewaremethod decorator of this Resolver classimport { getConnection } from 'typeorm'; import { Arg, ClassType, Mutation, Query, Resolver, UseMiddleware } from "type-graphql"; import { Middleware } from "type-graphql/dist/interfaces/Middleware"; import { v4 as uuidv4 } from 'uuid'; import { isAuthn } from '../middleware/isAuthn'; import { isAdmin, isDriver } from '../middleware/isAuthz'; function EntityBaseResolver<X extends ClassType,Y extends ClassType>({ suffix, tableName, entity, createInputType, updateInputType, middleware }: { suffix: string, tableName:string, createInputType: X, updateInputType: Y, entity: any, middleware?: Middleware<any>[], } ) { @Resolver({isAbstract:true}) abstract class BaseResolver { @Query(() => [entity], { name: `getAll${suffix}` }) @UseMiddleware(isAuthn,...(middleware || [])) async getAll():Promise<typeof entity[]> { let connection = await getConnection() .createQueryBuilder(entity, tableName) return connection.getMany() } @Query(() => entity, { name: `get${suffix}ById` }) @UseMiddleware(isAuthn,...(middleware || [])) async getById(@Arg('id') id:string):Promise<typeof entity> { let connection = await getConnection() .getRepository(entity) .createQueryBuilder(tableName) .where(`${tableName}.id = :id`, {id}) const entry = connection.getOne(); if (!entry) throw new Error(`${suffix} not found!`); return entry; } @Mutation(() => entity, { name: `create${suffix}` }) @UseMiddleware(isAuthn,...(middleware || [])) async create(@Arg("data", () => createInputType) data: typeof createInputType):Promise<typeof entity> { const id = uuidv4(); const entry = {['id']:id, ...data} return entity.create(entry).save(); } @Mutation(() => entity, { name: `update${suffix}` }) @UseMiddleware(isAuthn,...(middleware || [])) async update(@Arg("data", () => updateInputType) data: typeof updateInputType, @Arg('id') id:string):Promise<typeof entity> { const entry = await entity.findOne({ where: { [`id`]: id } }); if (!entry) throw new Error(`${suffix} not found!`); Object.assign(entry, data); await entry.save(); return entry; } @Mutation(() => entity, { name: `delete${suffix}` }) @UseMiddleware(isAuthn,...(middleware || [])) async delete(@Arg('id') id:string): Promise<typeof entity> { const entry = await entity.findOne({ where: { [`id`]:id } }); if (!entry) throw new Error(`${suffix} not found!`); await entry.remove(); return {id}; } } return BaseResolver; } export default EntityBaseResolver; -

All our

CoordinateResolverdoes now is extending this BaseResolver returned to provide simple CRUD endpoints. -

Thus, we have succeeded in defining & handling the table definition with TypeORM, CRUD endpoints with TypeGraphQL, all made possible through defining just some basic decorated TypeScript classes.

-

Here’s the sample Postman response for a POST request our

Coordinatesto create a new row in thecoordinatestable in our database

B : The Graph Relations

-

Relation mapping between different tables of our database was an engineering challenge that I made structured & streamlined using TypeORM’s relation mapping & a custom code dependency injection framework I developed as part of my research, specifically for handling the interactions between these entities more structuredly & intuitively

-

Say for instance the

Addressentity, which has aManyToOnemapping against myCoordinatesentity. We mention this using the TypeORM relation mapper with the Foreign Key. Also, this is created as a unidirectional relationship as per the product requirement// src/entity/Address.ts import { Field, ID, ObjectType, registerEnumType } from "type-graphql"; import { BaseEntity, Column, Entity, JoinColumn, ManyToOne, PrimaryColumn, RelationId } from "typeorm"; import { Coordinates } from "../coordinate/Coordinate.entity"; export enum AssociatedEntity{ Consignor = "consignor", Consignee = "consignee", Depot = "depot", Operator = "operator", Driver = "driver" } registerEnumType(AssociatedEntity, { name: "AssociatedEntity", // this one is mandatory description: "The entity associated with the transport activity", // this one is optional }); @Entity("addresses") @ObjectType() export class Address extends BaseEntity{ @Field(() => ID) @PrimaryColumn() readonly id: string; @Field(() => String) @Column("varchar", { length: 45 }) address_line_1: string; ........ @Field(type =>Coordinates) @ManyToOne(type => Coordinates) @JoinColumn({ name: "coordinate_id", referencedColumnName:"id" }) coordinates: Coordinates; @RelationId((address: Address) => address.coordinates) coordinate_id: string; @Field(() => AssociatedEntity) @Column({ type: "enum", enum: AssociatedEntity, default: AssociatedEntity.Driver }) associatedEntity: AssociatedEntity // Write a custom pattern for this @Field(() => String) @Column("varchar", { length: 45 }) pincode: string; } -

The above snippet also shows how gracefully Enumeration types have been handled in the backend & the database so that we have no data validation issues on integrations later

-

Next, we define the

AddressResolver, equipping basic CRUD functionalities with our coreEntityBaseResolver& then using our custom inversion-of-control framework to inject connection to theCoordinatesentity & return joint data.

These joins also have been equipped with thorough validation checks & error fallbacks to prevent any data inconsistency in the databaseimport { Repository } from 'typeorm'; import { Coordinates } from '../coordinate/Coordinate.entity'; // src/resolvers/AddressResolver.ts import { FieldResolver, Resolver, Root } from "type-graphql"; import { Address } from "./Address.entity"; import { CreateAddressInput } from "./AddressInput/CreateAddressInput"; import { UpdateAddressInput } from './AddressInput/UpdateAddressInput'; import { InjectRepository } from 'typeorm-typedi-extensions'; import { Service } from 'typedi'; import EntityBaseResolver from '../../abstractions/EntityBaseResolver'; const AddressBaseResolver = EntityBaseResolver({ suffix: "Address", tableName: "address", entity: Address, createInputType: CreateAddressInput, updateInputType: UpdateAddressInput, }) @Service() @Resolver(of => Address) export class AddressResolver extends AddressBaseResolver { constructor(@InjectRepository(Coordinates) private readonly coordinatesRepository: Repository<Coordinates>) {super() } @FieldResolver() async coordinates(@Root() address: Address): Promise<Coordinates> { return (await this.coordinatesRepository.findOne(address.coordinate_id))!; } } -

Here’s how, we were able to develop the 1st in-house technology of the lab, a seamless, scalable & data-consistent framework for creating configured API modules for various entities in a graph-based backend system

-

The codebase for developing each entity is 90% lesser than any other competing framework out there, making the abstractions we’ve used light, powerful & abiding with the SOLID principles of Object Oriented Design

-

The true power of this system is seen in executing complex queries. Below attached is the screenshot of the custom detailing of information we can get while executing a POST request to

CreateConsignmentfor theConsignmententity in theconsignmentstable in our database, & getting the granular level information about each of its related entity mappings in theAddress,Coordinate&Operatorentity

-

And yet the code for heavy lifting CRUD on such a wide column table, inter-related to multiple other tables is under 50 lines (90% less than any other marketframework)!

import { ContainerOnPlatform } from '../containerOnPlatform/ContainerOnPlatform.entity'; // src/resolvers/ConsignmentMovementResolver.ts import { Resolver, Query, Mutation, Arg, FieldResolver, Root } from "type-graphql"; import { Service } from "typedi"; import { InjectRepository } from "typeorm-typedi-extensions"; import EntityBaseResolver from "../../abstractions/EntityBaseResolver"; import { Address } from "../address/Address.entity"; import { Coordinates } from "../coordinate/Coordinate.entity"; import { ConsignmentMovement } from "./ConsignmentMovement.entity"; import { CreateConsignmentMovementInput } from "./ConsignmentMovementInput/CreateConsignmentMovementInput"; import { UpdateConsignmentMovementInput } from "./ConsignmentMovementInput/UpdateConsignmentMovementInput"; import { Repository } from 'typeorm'; const ConsignmentMovementBaseResolver = EntityBaseResolver({ suffix: "ConsignmentMovement", tableName: "consignment_movements", entity: ConsignmentMovement, createInputType: CreateConsignmentMovementInput, updateInputType: UpdateConsignmentMovementInput, }) @Service() @Resolver(of => ConsignmentMovement) export class ConsignmentMovementResolver extends ConsignmentMovementBaseResolver{ constructor( @InjectRepository(Coordinates) private readonly coordinatesRepository: Repository<Coordinates>, @InjectRepository(Address) private readonly addressRepository: Repository<Address>, @InjectRepository(ContainerOnPlatform) private readonly containerRepository: Repository<ContainerOnPlatform> ) { super() } @FieldResolver() async start_coords(@Root() consignment: ConsignmentMovement): Promise<Coordinates> { return (await this.coordinatesRepository.findOne(consignment.start_coord_id))!; } @FieldResolver() async end_coords(@Root() consignment: ConsignmentMovement): Promise<Coordinates> { return (await this.coordinatesRepository.findOne(consignment.end_coord_id))!; } @FieldResolver() async start_address(@Root() consignment: ConsignmentMovement): Promise<Address> { return (await this.addressRepository.findOne(consignment.start_address_id))!; } @FieldResolver() async end_address(@Root() consignment: ConsignmentMovement): Promise<Address> { return (await this.addressRepository.findOne(consignment.end_address_id))!; } @FieldResolver() async container(@Root() consignment: ConsignmentMovement): Promise<ContainerOnPlatform> { return (await this.containerRepository.findOne(consignment.container_id))!; } }

B : The Data specificity & the speed

- TypeGraphQL allows us to query for only the data that is required from the endpoint, hence,

- Response handling on client side is much smoother during Continuous Integration

- Data packets downloaded & transferred are lesser, making the load on the server & database extremely optimized

- This data specificity also helps with the speed of query execution, giving a latency of 52 miliseconds which is 300% faster than any contemporary technology

- Lastly, this technology also sped up development cycle of data-specific CRUD endpoints on our models from a day to 10 minutes (with API testing) which accelerated the feature delivery.

PART 2 : Authentication, Authorization, MFA & Session Management with Redis

A : Choosing Redis

- Redis is the in-memory database solution in the current architecture that handles the entire authentication, authorization, role-based access control & session management of user using the SaaS

- All Redis data resides in memory, which enables low latency and high throughput data access. Unlike traditional databases, In-memory data stores don’t require a trip to disk, reducing engine latency to microseconds. Because of this, in-memory data stores can support an order of magnitude more operations and faster response times. The result is blazing-fast performance with average read and write operations taking less than a millisecond and support for millions of operations per second.

B : The System Setup

-

The Logistics Lab authentication kicks off with the user registering & their entry being created in the Postgres database, as well as Redis token that gets generated for enabling Multi-Factor Authentication

-

The user receives an email with the URL containing the token that when submitted & verified by Redis, unlocks the user account for Login & other subsequent activity

-

For the email sending, an SMTP server was configured with the necessary credentials

-

Post-login, the user’s Role [

Operator,Admin, orDriver] restricts the endpoints they can be consuming, as per the middlewares passed to the endpoints. -

Following is sample where Authentication was setup & Roles were defined, with master access for the

Adminroleexport const isAuthn: MiddlewareFn<AppContext> = async ({ context }, next) => { const userId = (context.req.session! as any).userId const user = await getRepository(UserAccount).findOne(userId); if (!userId) { throw new Error("not authenticated"); } return next(); } const isAuthz = (role: Role): MiddlewareFn<AppContext> => async ({ context }, next) => { const userId = (context.req.session! as any).userId const user = await getRepository(UserAccount).findOne(userId); if (!userId || (user?.role !== role && user?.role !== Role.Admin) ) { throw new Error("You are not authorized to perform this action"); } return next(); }; export const isDriver = isAuthz(Role.Driver); export const isAdmin = isAuthz(Role.Admin); export const isOperator = isAuthz(Role.Operator); -

To pass middleware on an endpoint, we simply use the

@UseMiddleware()decorator on the method & pass in first, the criteria for authenticating, & then an array of middlewares which are the roles that are authorized to access given endpoint.

Here’s a snippet of securing a Query defined in theEntityBaseResolverusing our authentication & authorization middleware@Query(() => [entity], { name: `getAll${suffix}` }) @UseMiddleware(isAuthn,...(middleware || [])) async getAll():Promise<typeof entity[]> { let connection = await getConnection() .createQueryBuilder(entity, tableName) return connection.getMany() } -

By default, Redis tokens for user sessions end after a month. Can be modified as suited.

-

Systems have also been incorporated to help user regain & reset their password using their email address & a separate Redis token for the same. The code for the same is found in the

[UserAccountResolver](https://github.com/Logistics-Lab/typegraphql-api/blob/master/backend/src/modules/userAccount/UserAccount.resolver.ts)file

PART 3 : Spatial Data Generation & Storage

-

A big part of this project was data generation.

-

Sample data generation for the Postgres database tables was done using https://mockaroo.com and a bunch of NodeJS scripts & stored in a Google Sheets spreadsheet.

-

Apart from that, as per the algorithms team requirement, a system needed to be developed for returning points of interest in a given search radius, for working of routing & packing algorithms for the same

-

After much research on Google Maps API & MapMyIndia, Google Maps API was chosen owing to its accuracy, ease of integration & pricing model

-

However, for repeated queries, to prohibit extra cloud spend, each document for each location we get back from Google on the first query, was cached into a MongoDB database so that :

- subsequent similar query would be faster,

- Subsequent similar query would be free of cost

- With more research, more valuable spatial data would be easily available & structured in our own custom database

-

To combat the pagination Google Maps API enforces, a intelligent debouncing service was written for each call to the next page & for the same request, all results from all pages were aggregated & stored into MongoDB

Following is the code for the intelligent service that the our spatial endpoints consume to :- fetch data directly from MongoDB (for repeat queries) OR

- populate MongoDB with data from Google Maps & then fetch from it

const locationService = async(city:string, buildingType:string, resultMapper:(results:any[])=>any[]) => { try { await mongoose.connect(`mongodb://${process.env.MONGO_INITDB_ROOT_USERNAME}:${process.env.MONGO_INITDB_ROOT_PASSWORD}@mongodb:27017/spatial-db?authSource=admin`); const results = await Location.find({ city: city, buildingType: buildingType }).exec() if (results.length > 0) { console.log('coming from mongodb directly', results.length) return resultMapper(results) } else { console.log('saving to mongodb first') storeResultsInMongo(city, buildingType); await sleep(10000); const results = await Location.find({ city: city, buildingType: buildingType }).exec() console.log('coming from mongodb indirectly', results.length) return resultMapper(results) } } catch (error) { console.log('error connecting to mongodb, so proceeding with google maps API', error) throw new Error('could not fetch results'); } } const sleep = (ms:number) => { return new Promise((resolve) => { setTimeout(resolve, ms); }); } const storeResultsInMongo = async (city: string, buildingType: string) => { const queryToGMapsAPI = `https://maps.googleapis.com/maps/api/place/textsearch/json?key=${process.env.GOOGLE_PLACES_API_KEY}&query=${buildingType}%20in%20${city}&type=${buildingType}` const resFromGmaps = await axios.get(queryToGMapsAPI) as any let results = resFromGmaps.data.results; results.forEach(async (result: any) => { const location = new Location({ city: city, buildingType: buildingType, ...result }); await location.save(); }) let nextPageToken = resFromGmaps.data.next_page_token while (nextPageToken && nextPageToken.length > 0) { try { await sleep(1000); const nextQuery = `${queryToGMapsAPI}&pagetoken=${nextPageToken}` console.log(nextQuery, 'is ready') const nextResFromGmaps = await axios.get(nextQuery) as any let newRresults = nextResFromGmaps.data.results; let newStatus = nextResFromGmaps.data.status; newRresults.forEach(async (result: any) => { const location = new Location({ city: city, buildingType: buildingType, ...result }); await location.save(); }) nextPageToken = nextResFromGmaps.data.next_page_token console.log(newStatus, nextPageToken) } catch (error) { console.log('No more paginated responses', error) } } } export default spatialAPIRouter; -

MongoDB was chosen to provide the flexibility of noSQL since spatial data is still quite inconsistent & a column-rigid SQL option would prevent the use case from scaling

-

On top of MongoDB various Express API endpionts were exposed for querying different fields of the collected data, as per the utility. Proper documentation, environment setup & test requests for the same have been provided in our this API collection

PART 4 : Continuous Integration / Continuous Deployment

-

Any software at scale is equipped with this key engineering milestone to accomodate exponential growth in usage, feature development cycles & fault-tolerant product rollouts

-

The diagram shown above takes exactly 2 minutes to setup for any developer onboarding the code, irrespective of the machine it is being operated upon since the entire business logic, environment configuration & failure orchestration

-

The diagram shown below helps setup a fresh server for handling production load for our entire system in 10 minutes. This is the power of containerization technology & Infrastructure as Code concepts well-integrated into this architecture

A : Containerization with Docker

- To ensure maximum flexibility and replication of the code across development, staging & production environments, the business logic server was containerized into a movable block of code containing all the configuration details of its function inside it

- With any machine running the docker-daemon, the code will function exactly the same irrespective of the OS, File System management or other services that differ across computers.

B : Multi-Container Management with Docker-Compose

-

All the systems mentioned - Business Logic Server, Redis Database, MongoDB database & Postgres Database - run as containers through their respective Docker images

-

All these Docker containers run in the exact same network making the communication between them seamless & replicable very easily in any environment

Name Phase Requirement Solution Business Logic Server Development Code should be mapped to a file system volume inside development machine & support hot module reloading. Created a bind mount that symlinks the container to the host development machine so that developer can immediately observe their changes & how they impact the entire system Imported packages of the Container must be specified on host when developing An anonymous docker volume was created to handle imported packages inside container work well during development with local code changes Staging Docker image must be built & pushed to mimick production behavior Docker image with production configuration is built & pushed to container registry at Docker Hub after simulating production behavior on local Production Code running must be equipped with the latest feature rollouts Cron job running at 2AM IST everyday that reboots the system with the latest code (downtime - 45 seconds, healthcheck alerts in place) All Environment configurations must be stored separately from business logic Dedicated environment files providing configuration during container build time to switch between mimicking environment behavior seamlessly Redis DB, Mongo DB, Postgres DB All Data persistence across container reboots in case of container crashes Setup using a named volume between the database & the host machine so data will never be lost -

Here’s the code sample for the

docker-composefile that orchestrates this entire setup in around 2% of the time taken by a traditional system setup & software update rollout.version: '3.8' services: postgresdb: image: postgres restart: always volumes: - postgresdata:/var/lib/postgresql/data env_file: - '../../env/postgres.env' redisdb: image: bitnami/redis ports: - '6379:6379' volumes: - 'redisdata:/bitnami/redis/data' env_file: - '../../env/redis.env' mongodb: image: 'mongo' volumes: - mongodata:/data/db env_file: - ../../env/mongo.env api-server: build: context: '../../backend' dockerfile: 'dev.dockerfile' restart: always ports: - '8080:8080' volumes: - '../../backend:/app' - /app/node_modules env_file: - '../../env/dev.api-server.env' - '../../env/postgres.env' - '../../env/redis.env' - '../../env/mongo.env' depends_on: - postgresdb - redisdb volumes: postgresdata: redisdata: driver: local mongodata: -

GitHub actions were set in place to trigger updating the

latesttagged container on our Container Registery everytime a major version of the API was released -

Webhooks were setup to auto-generate documentation & update Postman Schema everytime the code on the remote was updated.

-

Thus, using this well-crafted end-to-end system for feature delivery, the entire project encompassing such a high-intensity & widespread use case was bootstrapped & architected for scale in a matter of 3 months.

Afterword

As a kid, I'd always been amazed by how stakeholders in a system can be treated as nodes in a graph & here, just creating something so pure, scalable & powerful was a really cool journey.

I remember my heart thumping as I executed the complex graph query on the Consignment entity in the system, or when running docker-compose up in my production server, and the smile that came after months of pouring my soul into architecting something I just conceived of as a cool project.

Fun thing is, I can't wait to do it all over again !